Maximizing DOM Performance! ⚡

No, you don't need a 3rd party library or framework. You and I can performantly work with a large number of items in our DOM using just a handful of JavaScript techniques.

Hi everybody!

One of the biggest misconceptions perpetuated by various frameworks is that you can’t performantly add, remove, and manipulate a large number of DOM elements without using their super awesome library. That was true in 2014, when frameworks like React were gaining traction and things like the virtual DOM were game changers. In the years since, browsers, along with their rendering engines and JavaScript runtimes, have gotten a whole lot snappier! ⚡

Below is a chart of Chrome’s Speedometer 1 benchmark scores from 2013 to 2018:

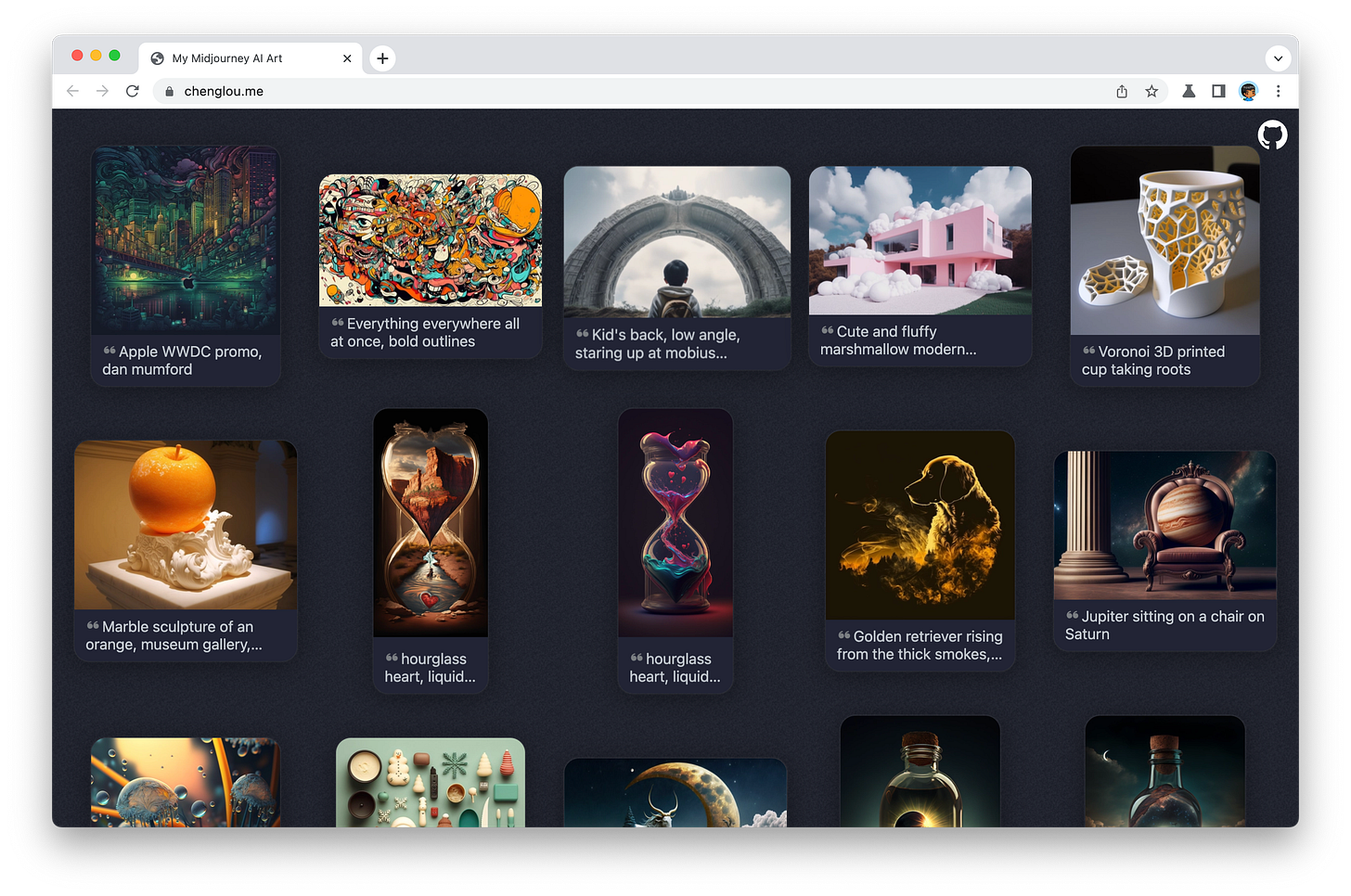

Today, if you want to create really performant data-intensive web apps where DOM elements are added and removed on the fly, you can totally pull them off using vanilla JavaScript techniques. To see this in action, look no further than Cheng Lou’s art gallery.

While I encourage you to visit and interact with his gallery yourself, you can see a highlight reel of me playing with it if you prefer.

If you want even more insights into how Cheng thinks about building scalable and performant apps, my interview with him is just what you need!

Basic Techniques

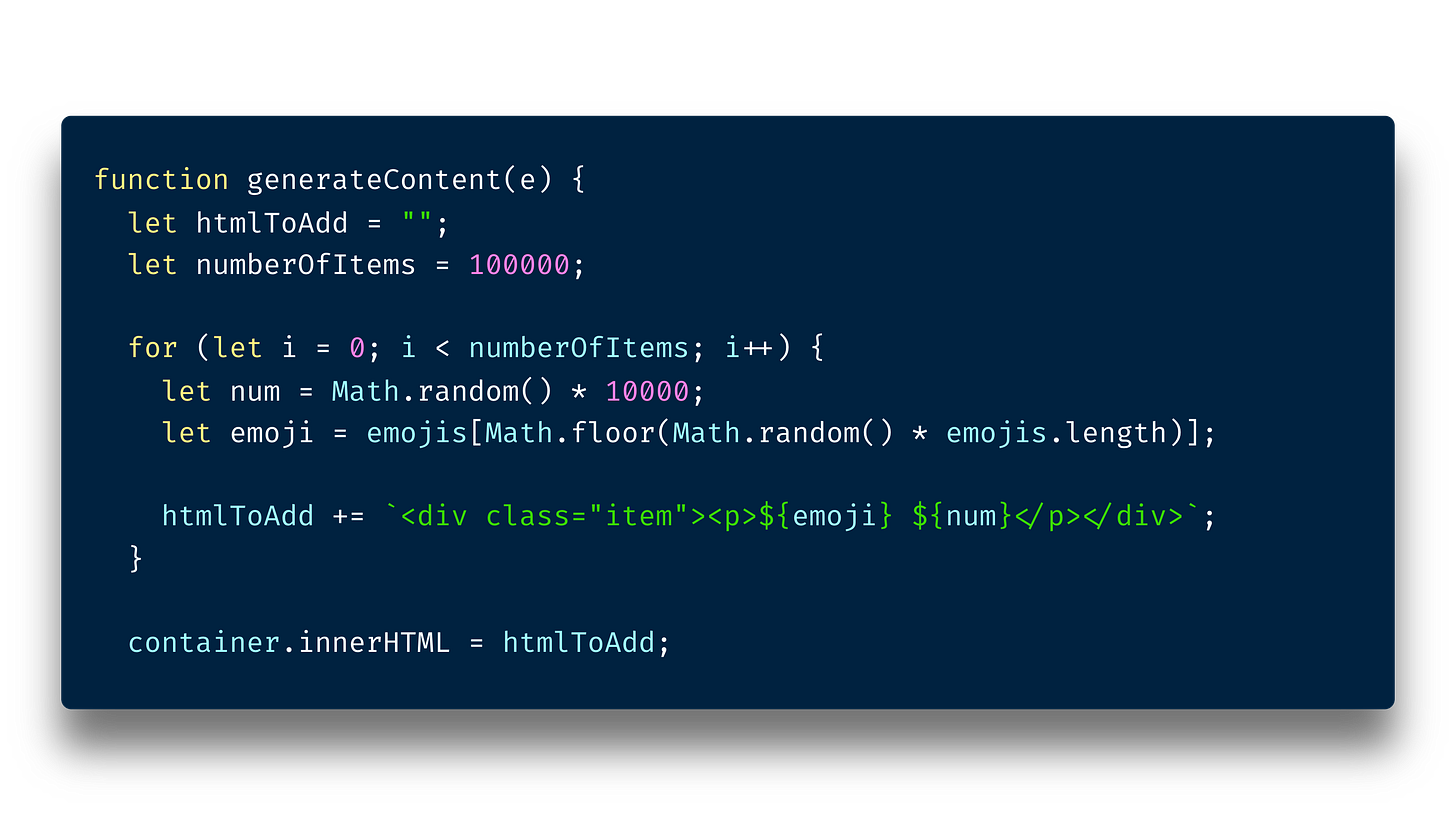

In my Quickly Adding Many Elements to the DOM article (and video), I dive deep into this topic. We look at multiple approaches for performantly adding elements to the DOM and weigh their pros and cons. The fastest approach, at least from my testing, was the innerHTML approach.
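A minimal sketch of the innerHTML approach might look like the following. It assumes the page has an element with the id `container`; the helper names (`buildItemsHtml`, `renderItems`) and the item markup are hypothetical, chosen only for illustration. The `for` loop just builds a string, and the DOM is touched once at the very end.

```javascript
// Generation step: build the markup for `count` items as one string.
// buildItemsHtml is a hypothetical helper used for illustration.
function buildItemsHtml(count) {
  let htmlToAdd = "";
  for (let i = 0; i < count; i++) {
    // Only string concatenation here; no DOM access inside the loop.
    htmlToAdd += `<div class="item">Item ${i}</div>`;
  }
  return htmlToAdd;
}

// Assignment step: hand the finished string to the DOM exactly once.
function renderItems(container, count) {
  const htmlToAdd = buildItemsHtml(count);
  container.innerHTML = htmlToAdd;
}

// Only run the DOM part when a browser document is available.
if (typeof document !== "undefined") {
  const container = document.querySelector("#container");
  if (container) {
    renderItems(container, 1000);
  }
}
```

If the item text ever comes from user input, escape it before concatenating it into `htmlToAdd`, because innerHTML parses whatever markup it is given.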

Other approaches include using a DocumentFragment to build an in-memory chunk of DOM that isn’t part of the live page, and then attaching it all in one go.
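Here is a minimal DocumentFragment sketch under similar assumptions (a `#container` element; `appendItemsWithFragment` is a hypothetical name). Each item is created and appended to the fragment, which isn’t part of the live page, and the whole batch is then inserted with a single `appendChild` call. The optional `doc` parameter exists only so the function can be exercised outside a browser.

```javascript
// Build `count` items inside a DocumentFragment, then attach them in one call.
// `doc` defaults to the browser's global document; it is injectable only so
// the sketch can be tested without a real DOM.
function appendItemsWithFragment(container, count, doc = globalThis.document) {
  const fragment = doc.createDocumentFragment();
  for (let i = 0; i < count; i++) {
    const item = doc.createElement("div");
    item.className = "item";
    item.textContent = `Item ${i}`;
    // Appending to the fragment does not touch the live page.
    fragment.appendChild(item);
  }
  // One insertion into the live DOM: the fragment's children are moved
  // into `container`, leaving the fragment itself empty.
  container.appendChild(fragment);
}

if (typeof document !== "undefined") {
  const container = document.querySelector("#container");
  if (container) {
    appendItemsWithFragment(container, 1000);
  }
}
```

Setting `textContent` instead of building an HTML string means the item text is never parsed as markup, which is one of the trade-offs versus the innerHTML approach.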

One of the main things we want to avoid is incurring a layout penalty for each item we add to the DOM. Instead, we want to hit our DOM with our new content exactly once. In other words, we separate generating the new markup from assigning it to the DOM. With the innerHTML approach, the generation happens inside a for loop, where an htmlToAdd variable accumulates the HTML content we eventually want to add. Only after the for loop has run to completion do we assign the contents of htmlToAdd to our DOM:

```javascript
container.innerHTML = htmlToAdd;
```

This ensures we perform the expensive layout calculations just once.
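One caveat: assigning to innerHTML replaces whatever the container already held. If you want to append a batch to existing content instead, a related option is `insertAdjacentHTML("beforeend", ...)`, which parses the new string once and leaves the existing children alone, unlike `innerHTML += ...`, which re-serializes and re-parses everything already in the container. The sketch below assumes a `#container` element, and `appendBatch` is a hypothetical helper name.

```javascript
// Append a batch of items after any existing children of `container`.
// appendBatch is a hypothetical helper; it assumes `labels` are trusted,
// already-escaped strings, since they are inserted as HTML.
function appendBatch(container, labels) {
  let htmlToAdd = "";
  for (const label of labels) {
    htmlToAdd += `<div class="item">${label}</div>`;
  }
  // One parse, one insertion; existing children are not re-parsed.
  container.insertAdjacentHTML("beforeend", htmlToAdd);
}

if (typeof document !== "undefined") {
  const container = document.querySelector("#container");
  if (container) {
    appendBatch(container, ["Item A", "Item B", "Item C"]);
  }
}
```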

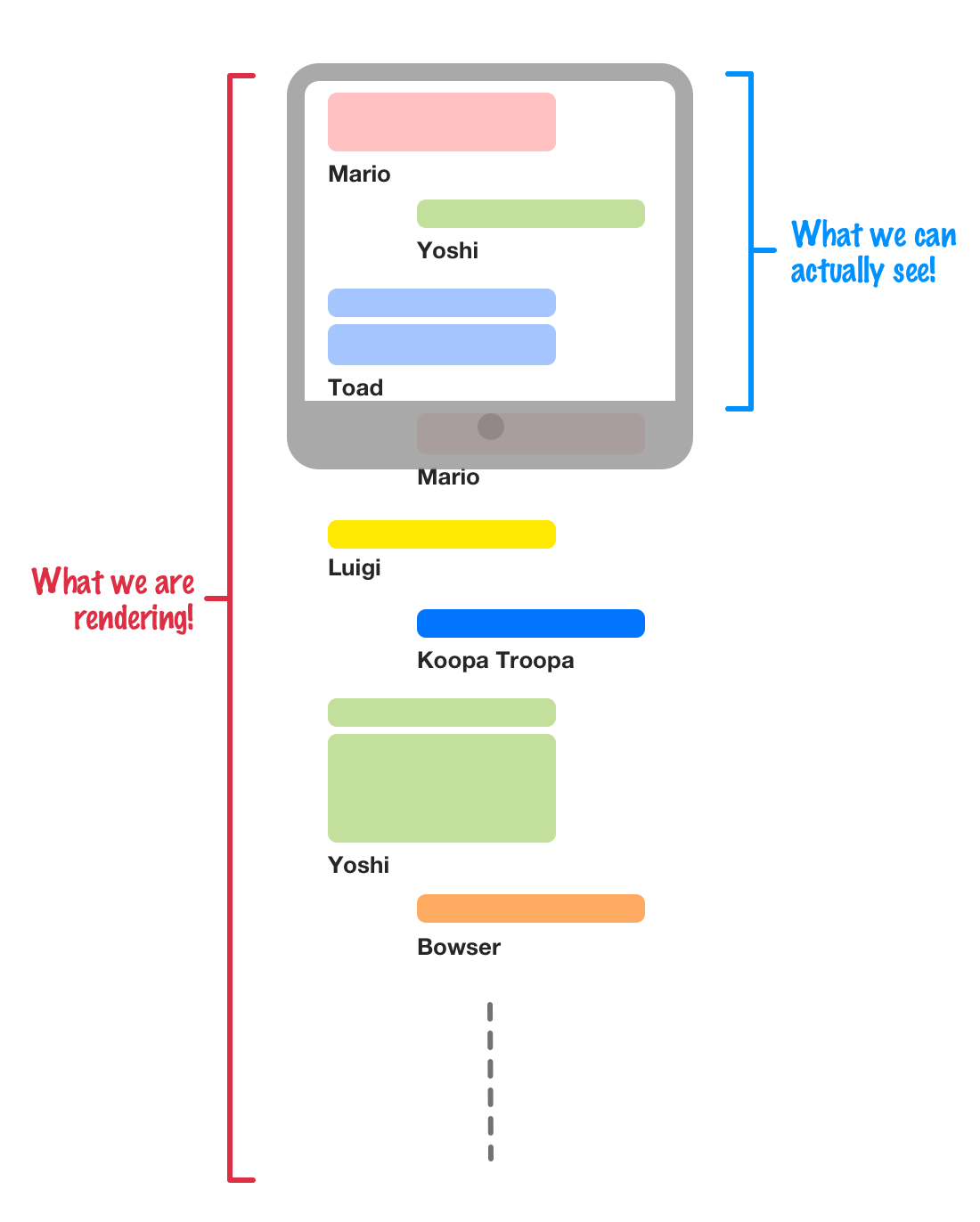

Now, there are additional techniques that we can use in certain situations if the final DOM structure ends up being too complex. One solution is virtualization. The idea with virtualization is that we only render the content that we actually see.

In many cases, without virtualization, our browser ends up doing a whole lot more work than necessary when dealing with a lot of DOM elements:

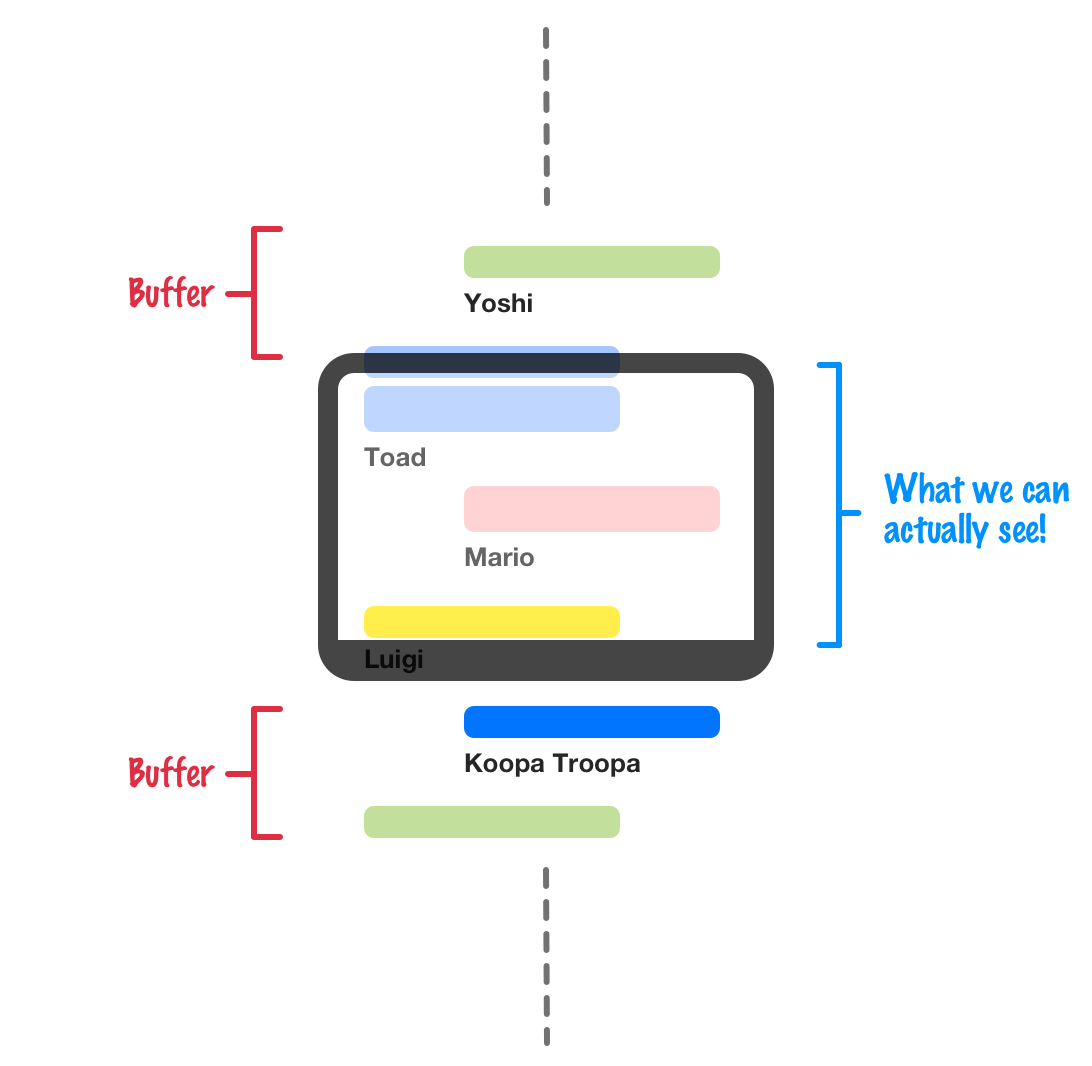

What if we did the heavy lifting only for the content we see, and dealt with content outside of our viewport on an as-needed basis?

That is the general idea behind UI virtualization. You can see this technique employed in Cheng’s gallery, as well as in many popular data-intensive apps like Netflix, Hulu, Dropbox, and more.
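To make the idea concrete, here is a minimal virtualization sketch. It assumes every row has the same fixed height (`ROW_HEIGHT`, a made-up value), a scrollable viewport element, and an inner spacer element whose height is set to the full list height so the scrollbar behaves as if every row existed. Only the rows inside the visible window, plus a small `overscan` buffer, become DOM. The names `getVisibleRange` and `renderVisibleRows`, and the `#viewport`/`#spacer` markup, are hypothetical; production virtualizers also deal with variable row heights, element recycling, and more.

```javascript
const ROW_HEIGHT = 30; // assumed fixed height of every row, in pixels

// Work out which rows intersect the viewport, padded by `overscan` rows
// on each side so fast scrolling doesn't reveal blank space.
function getVisibleRange(scrollTop, viewportHeight, totalRows, overscan = 5) {
  const first = Math.floor(scrollTop / ROW_HEIGHT);
  const last = Math.ceil((scrollTop + viewportHeight) / ROW_HEIGHT); // exclusive
  return {
    start: Math.max(0, first - overscan),
    end: Math.min(totalRows, last + overscan), // exclusive
  };
}

// Render only the visible rows, absolutely positioned inside a spacer
// that is as tall as the entire list.
function renderVisibleRows(viewport, spacer, rows) {
  const { start, end } = getVisibleRange(viewport.scrollTop, viewport.clientHeight, rows.length);
  let htmlToAdd = "";
  for (let i = start; i < end; i++) {
    htmlToAdd += `<div class="row" style="position:absolute;top:${i * ROW_HEIGHT}px;height:${ROW_HEIGHT}px">${rows[i]}</div>`;
  }
  spacer.style.height = `${rows.length * ROW_HEIGHT}px`;
  spacer.innerHTML = htmlToAdd; // one DOM write per render
}

if (typeof document !== "undefined") {
  // Assumed markup:
  // <div id="viewport" style="height:400px;overflow-y:auto">
  //   <div id="spacer" style="position:relative"></div>
  // </div>
  const viewport = document.querySelector("#viewport");
  const spacer = document.querySelector("#spacer");
  if (viewport && spacer) {
    const rows = Array.from({ length: 100000 }, (_, i) => `Row ${i}`);
    const update = () => renderVisibleRows(viewport, spacer, rows);
    let scheduled = false;
    viewport.addEventListener("scroll", () => {
      // Render at most once per animation frame, however many scroll events fire.
      if (scheduled) return;
      scheduled = true;
      requestAnimationFrame(() => {
        scheduled = false;
        update();
      });
    });
    update();
  }
}
```

Even with 100,000 rows in the data, only a few dozen row elements exist in the DOM at any moment, which is exactly the "only render what we see" idea.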

Conclusion

There are many architectural reasons to use 3rd party libraries and frameworks to build our web apps. Performance, especially DOM performance, shouldn’t be one of them. This is thanks to the great work browser makers have done in fixing common performance bottlenecks. They also don’t seem to be slowing down, with more perf improvements headed our way.

Until next time, feel free to reach out to me on Twitter or post on the forums if you want to say “Hi!”.

Cheers,

Kirupa 😀